Seminar

MediaFutures Seminar: Translating Educational Data into Meaningful Practices: Insights from the field of Learning Analytics. Mohammad Khalil.

Online: Mohammad Khalil, senior researcher at UiB Centre for the Science of Learning & Technology (SLATE), will give a seminar on 25 November, at 12:00. TITLE: Translating Educational Data into Meaningful Practices: Insights from the field of Learning Analytics WHEN: Thursday 25 November, 12:00-13:00 WHERE: https://uib.zoom.us/j/63125529816?pwd=OTc0MStQYUczTkEzTXVRZTlBYUpyQT09 Meeting ID: 631 2552 9816 Password: 8U0vAVAy ABSTRACT: Since the last decade, higher education […]

-

MediaFutures Seminar: Platform provenance analysis for digital images. Assistant Prof. Cecilia Pasquini.

Online: Cecilia Pasquini, Assistant Professor at the University of Trento, Italy, will give a seminar on 26 November, at 12:00. TITLE: Platform provenance analysis for digital images. WHEN: Friday 26 November, 12:00-13:00 WHERE: https://uib.zoom.us/j/61463829083?pwd=UmpxU3AwY0JFWDZITWppWkcxWlZTUT09 Meeting ID: 614 6382 9083 Password: 65UPcP4H ABSTRACT: The forensic analysis of image and video data that have been shared through social […]

-

-

MediaFutures Seminar: Modeling News Flows: How Feedback Loops Influence Citizens’ Beliefs and Shape Societies. Assoc. Prof. Damian Trilling.

Damian Trilling, Associate Professor at the University of Amsterdam, will give a seminar on 2 December, at 12:00. TITLE: Modeling News Flows: How Feedback Loops Influence Citizens' Beliefs and Shape Societies WHEN: Thursday 2 December, 12:00-13:00 WHERE: Zoom: https://uib.zoom.us/j/62205578637?pwd=azNkZ21UY3V6cE5KYTVJRGxmclZOQT09#success Meeting ID: 622 0557 8637 Password: YT2sX3MZ ABSTRACT: In both public and scientific debates, many worry that the architecture of […]

-

-

MediaFutures Seminar: Automated Fact-checking: the scope of the problem in the state-of-the-art. Postdoc Fellow Ghazaal Sheikhi.

Ghazaal Sheikhi, Postdoc Fellow from MediaFutures, University of Bergen, Norway, will give a seminar on 14 January, at 11:00. TITLE: Automated Fact-checking: the scope of the problem in the state-of-the-art WHEN: Friday 14 January, 11:00-12:00 WHERE: Zoom - https://uib.zoom.us/j/68310482035?pwd=NHR2NXhuU1lZSjFHdEZCekNxaC9yZz09 Meeting ID: 683 1048 2035 Password: krFzY285 ABSTRACT: Fact-checking in technical terms is the process of analyzing textual content for claim […]

-

-

MediaFutures Seminar: News diversity and recommendation systems: An interdisciplinary approach. Dr. Kristin Van Damme.

Dr. Kristin Van Damme, a senior researcher from Artevelde University of Applied Sciences, will give a seminar on 11 February at 12:00. TITLE: News diversity and recommendation systems: An interdisciplinary approach. WHEN: Friday 11 February, 12:00-13:00 WHERE: Zoom - https://uib.zoom.us/j/68035691403?pwd=aGtEaVlVREx3MzFVM3diQlJGU1JCZz09 Meeting ID: 680 3569 1403 Password: pXe8JC3C ABSTRACT: Concerns about selective exposure and filter bubbles in the digital news environment have […]

-

MediaFutures Seminar: Visual Content Verification in the News Domain. PhD Candidate Sohail Ahmed Khan

Sohail Ahmed Khan, PhD Candidate from MediaFutures, University of Bergen, Norway, will give a seminar on 25 February, at 11:00. TITLE: Visual Content Verification in the News Domain WHEN: Friday 25 February, 11:00-12:00 WHERE: Zoom - https://uib.zoom.us/j/66009400805?pwd=MWpHZjdpK29TZTlSTVpZTXhDdkhSZz09 Meeting ID: 660 0940 0805 Password: qHdQfj1S ABSTRACT: The purpose of the talk is to get valuable comments and suggestions […]

-

-

MediaFutures Seminar: Detecting Fake News by Using Weakly Supervised Learning. Assoc. Prof. Özlem Özgöbek

Dr. Özlem Özgöbek, Associate Professor at NTNU, Norway, will give a seminar on 17 March, at 13:00. TITLE: Detecting Fake News by Using Weakly Supervised Learning WHEN: Thursday 17 March, 13:00-14:00 WHERE: Zoom - https://uib.zoom.us/j/64607939290?pwd=cStOdG90YWRjSW02RmN6TjAxakQwZz09 Meeting ID: 646 0793 9290 Password: m9hyue9C ABSTRACT: Spread and existence of fake news has been amplified by the advancements in internet and […]

-

-

UiB AI #2 But, why? – make AI answer!

Welcome to the second seminar in the UiB AI seminar series. The event is open to all employees and students at UiB. Prior registration is required. TITLE: UiB AI #2 But, why? - make AI answer! WHEN: Friday 8 April, 10:00-12:00 WHERE: Universitetsaulaen, Muséplassen 3, Bergen Seminar registration Background: Artificial intelligence (AI) is increasingly involved in […]

-

MediaFutures Seminar: Fairness—Are algorithms a burden or a solution? Dr. Christine Bauer, Assistant Professor at Utrecht University

Dr. Christine Bauer, Assistant Professor at Utrecht University, will give a seminar on 21 April, at 13:00. TITLE: Fairness—Are algorithms a burden or a solution? WHEN: Thursday 21 April, 13:00-14:00 WHERE: Zoom - https://uib.zoom.us/j/66369080035?pwd=MDFzdmV6TUdCVVZlZnhsNWc1eHlMUT09 Meeting ID: 663 6908 0035 Password: F9fN181n ABSTRACT: Recommender systems play an important role in everyday life. These systems assist users in choosing products […]

-

-

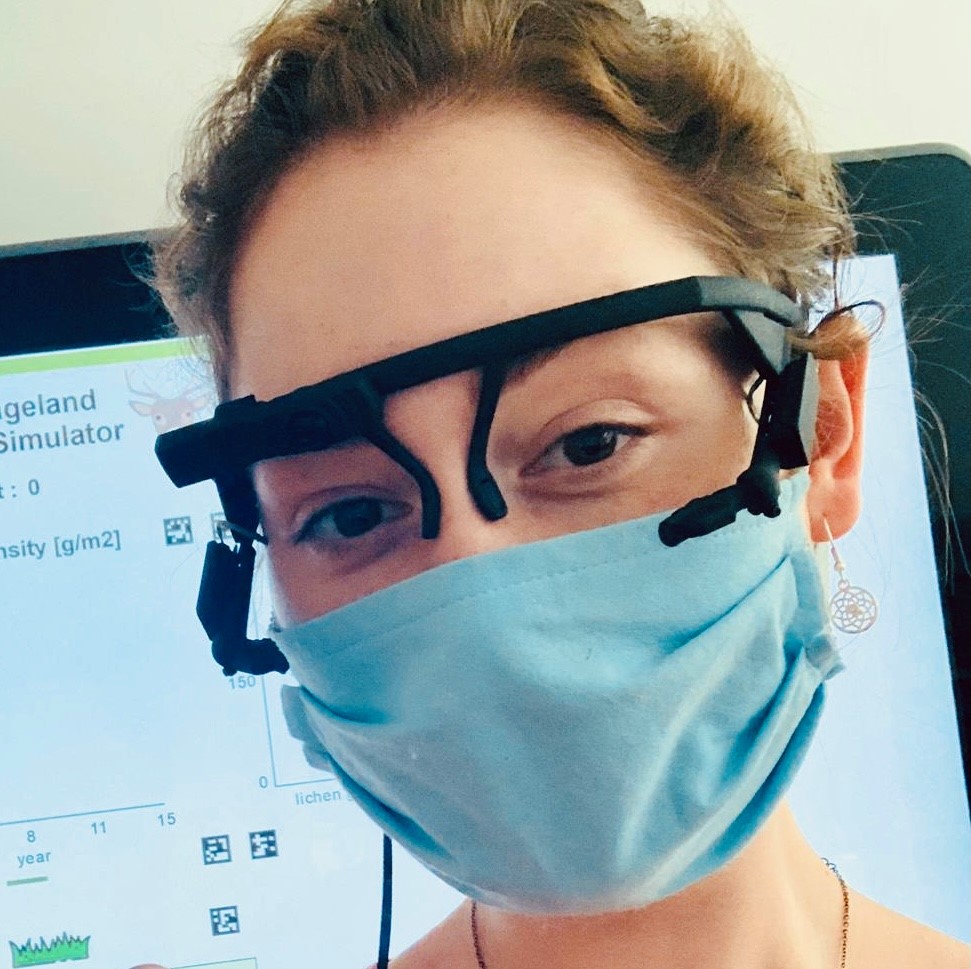

Human-Computer Interaction lecture series: Visual Attention in Face-to-Face and Computer-Mediated Interactions. Katarzyna Wisiecka, PhD Candidate in Psychology and Informatics at SWPS University & Polish-Japanese Academy of Information Technology.

Katarzyna Wisiecka, PhD candidate in psychology and informatics at SWPS University & Polish-Japanese Academy of Information Technology, will give a seminar on 4 May, at 11:15. TITLE: Visual Attention in Face-to-Face and Computer-Mediated Interactions WHEN: Wednesday 4 May, 11:15-12:00 WHERE: MediaFutures and Zoom: https://uib.zoom.us/j/61410167655?pwd=WFJXbGdMVXVuYk5sTDJ2bllPZ3Yydz09 Meeting ID: 614 1016 7655 Password: 9VA5L6D1 ABSTRACT: Computer-mediated interaction has become an integral part […]

-

Human-Computer Interaction lecture series: UX Research Design in Practice: Some Examples from Safety Critical Industrial Environments. Dr Duy Le, senior research scientist at VNUHCM University of Science, Vietnam

Dr. Duy Le, a senior research scientist and head of the Human-Computer Interaction division of SELab, VNUHCM University of Science, Vietnam, will give a seminar on 4 May, at 14:15. TITLE: UX Research Design in Practice: Some Examples from Safety Critical Industrial Environments WHEN: Wednesday 4 May, 14:15-15:00 WHERE: MediaFutures and Zoom: https://uib.zoom.us/j/69626023560?pwd=cVdKWjR1VithNUo4a1g0NTQ1OGpFdz09 Meeting ID: 696 2602 3560 […]

-

MediaFutures Seminar: Media entertainment experiences among youth on global platforms. Marika Lüders, Professor at the University of Oslo

Marika Lüders, Professor at the University of Oslo, will give a seminar on 13 May, at 12:00. TITLE: Media entertainment experiences among youth on global platforms WHEN: Friday 13 May, 12:00-13:00 WHERE: Zoom - https://uib.zoom.us/j/68002953641?pwd=WGU1ZGFKUTNMZlZ0a29GaVlrWFJuUT09 Meeting ID: 680 0295 3641 Password: PEw18sgA ABSTRACT: The media and platforms taken for granted by those born since the turn of the […]