
Bergen-Boston Forum

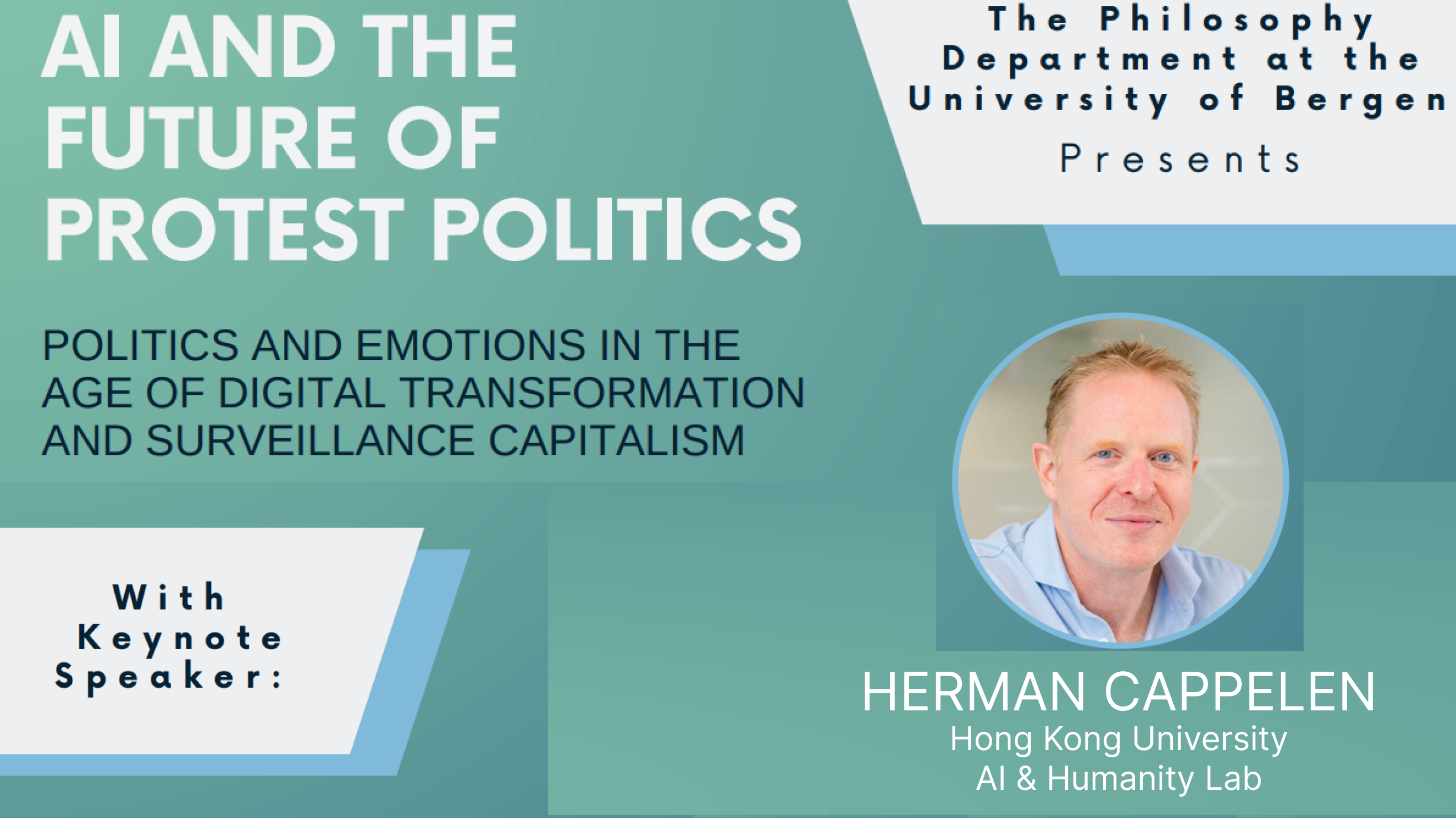

We are happy to invite you to a two-day hybrid workshop on AI and the Future of Protest Politics: Politics and Emotions in the Age of Digital Transformation and Surveillance Capitalism.

Our goal is to bring together prominent scholars from different disciplines to discuss the impact of AI-based platforms, and their underlying technological and economic principles, on political discourse and protest.

The event will draw on the Boston-Bergen Forum on Digital Futures, an international research network connecting the ‘Culture, Society & Politics’ and ‘Practical Philosophy’ research groups at UiB’s Philosophy Department, the Applied Ethics Center at UMass Boston, and the MIT Program on Human Rights and Technology.

MediaFutures center director Christoph Trattner is co-leading the project together with Professor Franz Knappik (Bergen), Dr. Christopher Senf (Bergen) and Professor Nir Eisikovits (UMass).

WHERE: The Philosophy Department in Bergen, Sydnesplassen 12/13, the seminar room on the first floor (1. etasje)

As this is a hybrid event, you can join in person or via Zoom.

Register here

1st Workshop Day, Thursday, November 30th

| Time | Session |
| --- | --- |
| 09.15 | Welcome Note |
| Section I: Making AI Intelligible | |
| 09.30 | Keynote by Herman Cappelen (Hong Kong, online): “AI and the Commodification of Meaning” |
| 10.15 | Q&A (Moderation, Jesse Tomalty) |
| 11.00 | Rosalie Waelen (Bonn): “The Struggle for Recognition and AI’s Impact on Self-development” |
| 11.30 | Q&A (Moderation, Chris Senf) |
| 12.30 | Lunch break at Café Christie |
| Section II: Contesting the Attention Economy | |
| 13.45 | Sebastian Watzl (Oslo): “What is Wrong with How Attention is Commodified?” |
| 14.30 | James Williams: “tba” |
| 15.00 | Q&A (Moderation, Alec Stubbs) |
| Section III: Algorithms of (In)justice | |
| 15.45 | Kjetil Rommetveit (Bergen): “(How) Can you code rights and morality into digital infrastructures and AIs?” |
| 16.15 | Alec Stubbs (UMass, Boston): “Generative AI and the Future of Work” |
| 16.45 | Q&A (Moderation, Carlota Salvador Megias) |
| 17.30 | End |

2nd Workshop Day, Friday, December 1st

| Time | Session |
| --- | --- |
| 10.00 | Welcome Note |
| Section I: Political Technologies | |
| 10.15 | Eugenia Stamboliev (Vienna): “Protesting the Classification of Emotions (affects) and its Technological Means” |
| 10.45 | James Hughes (UMass, Boston): “Communication Technologies: Hegemonic, Radicalizing and Democratic” |
| 11.15 | Q&A (Moderation, Alec Stubbs) |
| 12.15 | Lunch break at Café Christie |
| Section II: Future of Protest Movements | |
| 13.30 | Paul Raekstad (Amsterdam): “Domination Without Dominators: The Impersonal Causes of Oppression” |
| 14.00 | Christopher Senf (Bergen): “Algorithmic Exploitation of Recognition” |
| 14.30 | Q&A (Moderation, Ane Engelstad) |
| 15.15 | Coffee break |
| Section III: Activism and Philosophy in the Age of AI | |
| 15.30 | Maria Brincker (UMass Boston, online): “What kind of space is a ‘platform’ with its own goals?” |
| 16.00 | Kade Crockford (ACLU Massachusetts & MIT Media Lab, online): “All Politics is Local: Fighting Face Surveillance from the Ground Up in Massachusetts” |
| 16.30 | Q&A (Moderation, Chris Senf) |
| 17.30 | End |

Abstracts:

1) Herman Wright Cappelen (Hong Kong)

“AI and the Commodification of Meaning”

AI systems, owned by private corporations, will soon have the ability to control the meaning of the sentences we speak and interpret. This can be seen as a form of commodification of speech act content, a more serious form of commodification than e.g., artistic commodification. The determination of meaning by AI raises concerns about corporate control over language, reminiscent of Orwellian scenarios. Often, the goals behind these communicative exchanges will be foreign to individuals, who may not endorse or even be aware of them. The result is a form of meaning alienation.

2) Rosalie Waelen (Bonn)

“The Struggle for Recognition and AI’s Impact on Self-development”

Critical theories, with their focus on power dynamics and emancipation, offer a valuable basis for the analysis of AI’s social and political impact. Axel Honneth’s theory of recognition is one such critical theory. It adds to the present AI ethics debate because it sheds light on the different ways in which AI reinforces or exacerbates struggles for recognition. Moreover, through the lens of Honneth’s theory, one learns how AI can harm people’s self-development. This presentation highlights some of those contemporary struggles for recognition and their (potential) impact on people’s self-development.

3) Sebastian Watzl (UiO Oslo)

“What is Wrong with How Attention is Commodified?”

Our attention is commodified: it is bought and sold in market transactions when individuals lend out the ability to control their attentional capacities in exchange (for example) for technological services. What is wrong with that? Attention markets, we argue, resemble labor markets. By drawing on the ethics of commodification and core features of attention, we show that attention markets, while not always morally wrong, carry special moral risks: because of how attention shapes beliefs and desires, subjective experience and action, they are prone to be disrespectful, alienating, and provide fertile grounds for domination. Our analysis calls for regulatory interventions.

4) James Williams

tba

5) Kjetil Rommetveit (UiB Bergen)

“(How) Can you code rights and morality into digital infrastructures and AIs?”

In 1980, philosopher of technology Langdon Winner famously asked ‘Do Artifacts Have Politics?’ This question was followed up by Latour’s (1994) and Verbeek’s (2008) analyses of the technological mediation of morality. Whereas these questions were once provocative, in recent AI regulations they have become part of official governance mechanisms. In this talk I present some novel approaches to governance in the EU, specifically the risk-based approach and the design-based approach. Situating these within a wider techno-regulatory imaginary, I provide examples of how these instruments play out in practice. I end on some critical questions: what kind of politics does in-built morality have? And what implications can be discerned for critical publics?

6) Alec Stubbs (UMass Boston)

“Generative AI and the Future of Work”

This talk intertwines André Gorz’s post-work philosophy with Herbert Marcuse’s critical theory to envision a democratized future in which generative AI serves the productive aims of society. The talk evaluates the pitfalls of generative AI in reshaping labor, including the likelihood of technological unemployment, downward pressure on wages, and deskilling of workers. The discussion also evaluates the potential of generative AI in reshaping labor, emphasizing the need for leftist politics and labor struggles to demand a reduction of the workweek. Central to the argument is Gorz’s imperative to redefine work’s role in a technologically advanced, equitable society.

7) Eugenia Stamboliev (Vienna)

“Protesting the Classification of Emotions (affects) and its Technological Means”

To critique affect technology, we need to politicize emotionality and affectivity anew. Today, we are witnessing the emergence of intrusive algorithmic technologies, such as AI, in our daily lives. These technologies, designed to measure and control lives, information, and data, are intended to nudge and influence our political moods and public sentiments as much as they are to measure expressions and emotions. In this talk, I will discuss the history of two types of “affect technologies” (AT) and offer some criticism of their goals and applications. First, ATs intended to measure and classify emotions and affection emerged from the cognitive turn in computer studies. While popular, these ATs are normatively problematic and flawed, but still influence the design and economic models underlying many recognition systems. Second, ATs expected to drive, manage, and influence political beliefs and public moods underlie architectures that do more than manage emotions via technological means: they are part of the devaluation of emotions through political campaigning. Protesting the shortcomings of ATs means calling into question both the normative and political agendas underlying affect technologies, as well as offering new and positive approaches to affectivity that are beyond the scope of measurement and control, but remain politically crucial for democratic protest while avoiding commercial and technical exploitation.

8) James J. Hughes (UMass Boston & IEET)

“Communication Technologies: Hegemonic, Radicalizing and Democratic”

Books, radio and television all transformed political mobilization, by both elites and radicals. How different are the Internet, social media and algorithmically driven communication? Are we more likely to form radical sub-communities, each with its own reality (e.g. MAGA)? Can we envision democratic countervailing institutions emerging from the “commodification, outrageification, and gamification of protest” by platform companies? Will the algorithmic rules and required moderation included in the EU AI Act, DMA and DSA reduce ideological hegemony, improve collaboration and decrease toxicity in these environments?

9) Paul Raekstad (Amsterdam)

“Domination Without Dominators: The Impersonal Causes of Oppression”

Social movements of the last centuries have been naming and analyzing the complex forms of personal and impersonal domination that they fight to overcome. Yet current theories of domination have largely been unable to make sense of the latter. Theories of domination as being subject to the will, or arbitrary power, of another rule them out, while extant theories of impersonal domination are often unsystematic or narrowly focused. My paper tries to remedy this by developing a systematic theory of impersonal domination, distinguishing some important types thereof, and showing why it matters for universal human emancipation.

10) Christopher Senf (UiB Bergen)

“Algorithmic Exploitation of Recognition”

tba

11) Maria Brincker (UMass Boston)

“What kind of space is a ‘platform’ with its own goals?”

How are we to understand our political actions on surveillance and algorithm-driven for-profit platforms? Current social media platforms present users with possibilities of building vast networks and achieving massive, fast reach to highly dispersed groups. Hence, they present incredible opportunities for expanded agency, organizing, and information sharing. However, these platform ecosystems also present users with highly unusual affordance spaces, which might pose challenges to our agency. Proprietary algorithms, vast data harvesting and camouflaged behavior modification tools are used to drive platform company interests – often conflicting with those of users. We engage in political movements to shape the future, but how do our actions on these platforms in fact shape our future and our extra-situational spaces?

12) Kade Crockford (ACLU & MIT)

“All Politics is Local: Fighting Face Surveillance from the Ground Up in Massachusetts”

In 2019, the ACLU of Massachusetts launched a campaign to bring democratic control over government use of facial surveillance technology. Over the following two years, we passed eight bans on government use of face surveillance in cities and towns across the state, including in Massachusetts’ four largest cities: Boston, Cambridge, Springfield, and Worcester. We also passed a state law creating some regulations on police use of the technology statewide. During this talk, campaign leader Kade Crockford will discuss how the ACLU’s campaigners dreamed big, built a coalition, and fought from the ground up to defeat the narrative of technological determinism, and how you can do it, too.