
Talks by Google DeepMind researcher

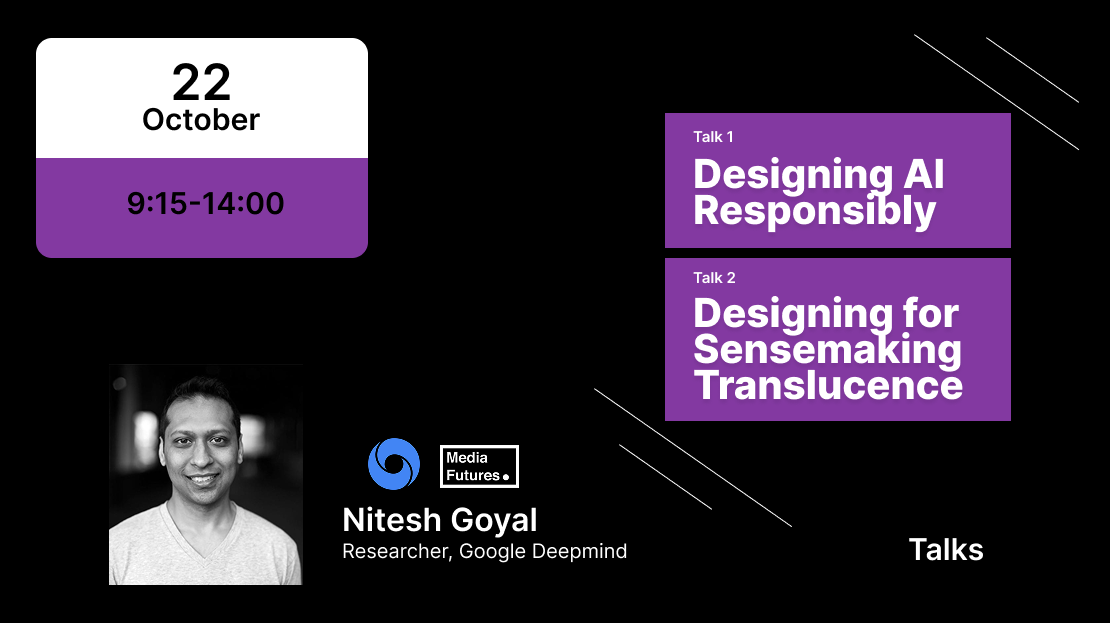

SFI MediaFutures hosts two talks with Google DeepMind researcher Nitesh Goyal, invited and introduced by Work Package 4 co-leader Professor Morten Fjeld.

Tesh (Nitesh) Goyal leads research at the intersection of AI and Safety at Google DeepMind. His work at Google has led to the launch of ML-based tools such as SynthID to support AI literacy; AI Studio and MakerSuite to help creatives use AI to bring their ideas to life; Harassment Manager to empower targets of online harassment; ML-based moderation to reduce toxic content on platforms like OpenWeb; and multiple NLP-based tools that reduce biased sensemaking. He received his MSc in Computer Science from UC Berkeley and RWTH Aachen before receiving his PhD in Information Science from Cornell University. His research has been supported by the German Government and the National Science Foundation. Frequently collaborating with industry (Google Research, Yahoo Labs, HP Labs, Bloomberg Labs), he has published in top-tier HCI venues (e.g., CHI, CSCW, FAccT), received three Best Paper Honorable Mention awards (CHI, CSCW), and his work is frequently covered in the press. Tesh also serves on the ACM SIGCHI Steering Committee, as an appointed Adjunct Professor at New York University and Columbia University, and as an ACM Distinguished Speaker.

Talk 1: Wednesday 22 October, 09:15–10:00: Designing AI Responsibly | Case Studies from Practice

Location: Egget/UiB Auditorium

As an HCI researcher, my work pushes the boundaries of inclusive AI/ML models. In this talk, I will share case studies about building these models and the challenges of adopting them at large scale. Some of these models are commonly used to detect toxicity in online conversations, and they are trained on relatively large datasets annotated by human raters. In the first case study, I will explore how raters' self-described identities affect how they annotate toxicity in online comments. In the second case study, I will show where our collective scholarship falls short in evaluating the Responsible AI (RAI) tools that inspect such AI/ML models. I will end with recommendations for an inclusive and equitable RAI practice.

Talk 2: Wednesday 22 October, 13:00–14:00: Designing for Sensemaking Translucence | A Crime-Solving Case Study

Location: Room Stortinget, UiB

Solving crimes correctly is a critical, life-altering problem in which intelligence analysts constantly struggle against their own biases. Although more than 50 years of scholarship have repeatedly asked how AI should be designed responsibly to support these users and use cases, we have barely begun to scratch the surface. In this lecture, I introduce the notion of Sensemaking Translucence into bias-, fairness-, and equity-related challenges. I then provide examples of how AI can support Sensemaking Translucence. Finally, I make the case that it is important to design from a human-centered perspective, leveraging AI to support these Human-AI Collaboration workflows.

For questions, please contact: Morten.Fjeld@uib.no