Gone are the days of watching sports solely on a single fixed device. For most fans, smartphones, designed for engagement and interaction, supplement the live game experience. Fans want in-game explanations, performance statistics, and the ability to share their excitement. Using AI and visual recognition tools, AiCommentator offers both interactive and non-interactive commentary, giving viewers the power to choose their experience.

Behind AiCommentator is a team of researchers from SFI MediaFutures, including PhD candidate Peter Andrews, Oda Nordberg, Frode Guribye, Morten Fjeld, Njaal Borch (Schibsted) and Kazuyuki Fujita (Tohoku University). They merged the interactive and non-interactive experiences into a single mobile application built on AI-based visual recognition.

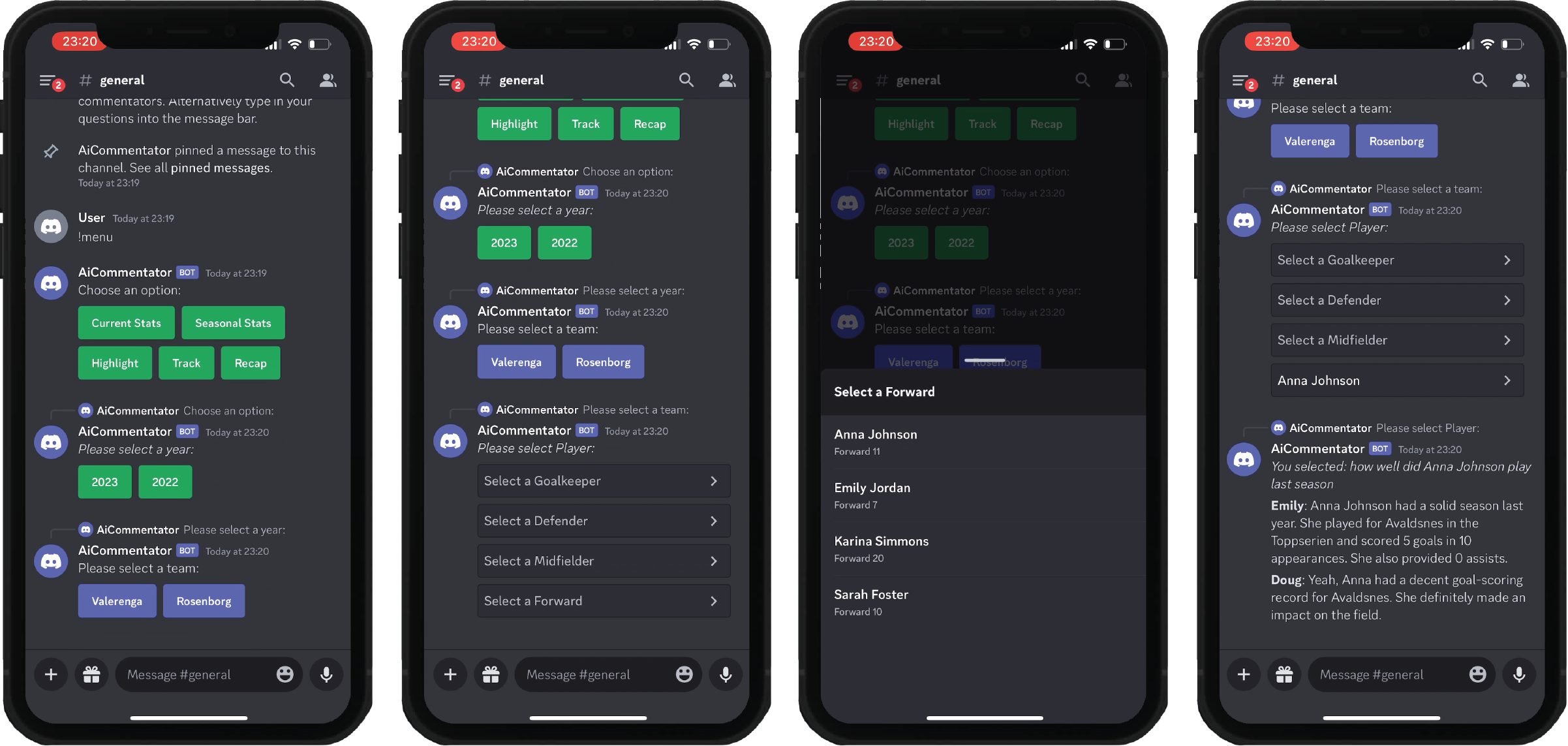

AiCommentator provides visual feedback on real-time and historical in-game statistics and player locations. The tool operates through text-based interactions with a Discord bot, offering automated sports commentary that communicates real-time game developments while engaging users conversationally.

“With AiCommentator, we are redefining the traditional role of the commentator to create an interactive experience for viewers,” Peter Andrews says.

Method and function

AiCommentator has two interfaces: an interactive one and a non-interactive one. It is a multimodal conversational agent that combines sound, image and textual information.

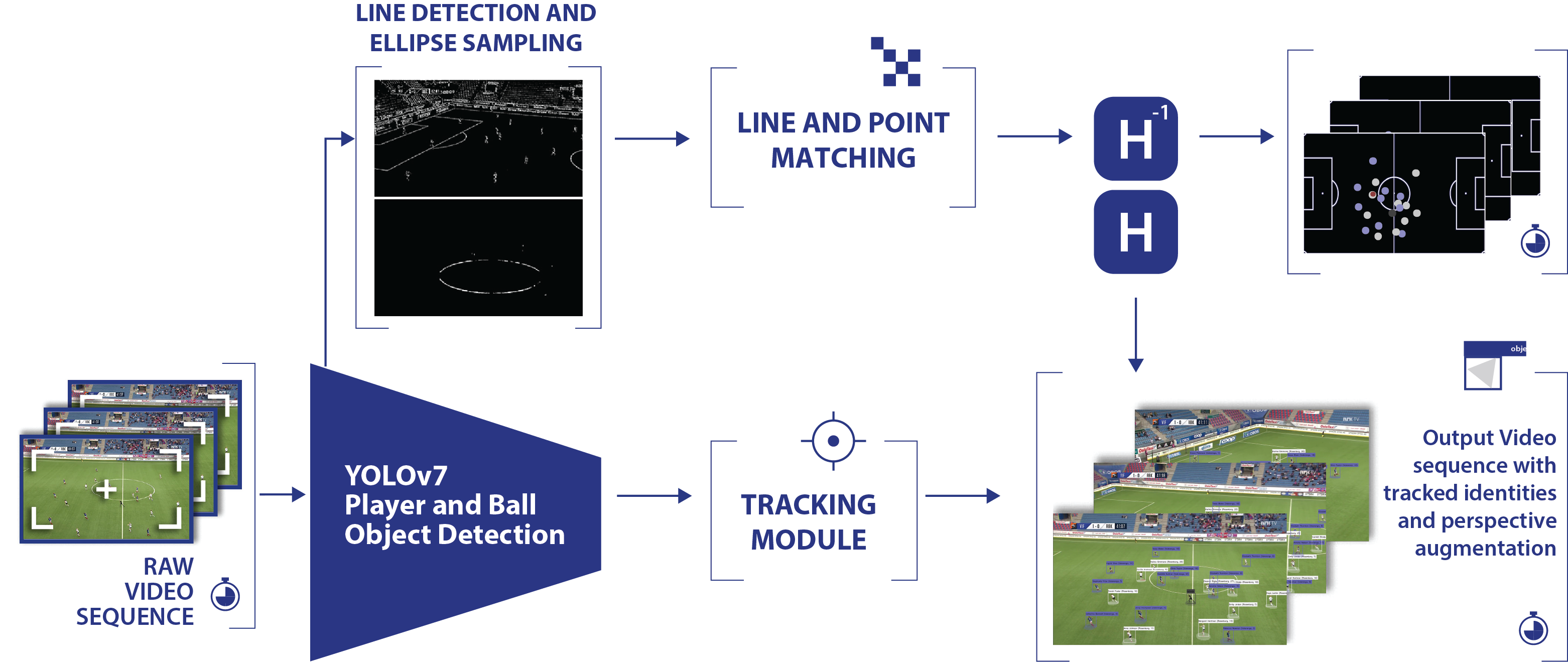

Using computer vision and AI (e.g., deep learning and natural language processing), the researchers built an interactive layer on top of the game that provides additional information to the user. Through computer vision and image processing techniques, AiCommentator extracts information from video and lets users interact with it through a multimodal conversational agent. The result is a more personalised user experience that synchronises visualisations with commentary.

AiCommentator provides two modes: interactive and non-interactive. In the interactive mode, users can ask questions about players' in-game and seasonal performance. The system can track the players and the ball and map their locations onto a top-down view of the pitch. These steps are combined in FootyVision, an all-in-one model that handles both tracking and perspective transformation. The researchers fed data from the FootyVision model into AiCommentator to make watching football games more interactive.

“FootyVision benefits from the ability to localise players in a top-down viewpoint even when there is a lack of visual information. It outperforms prior tracking algorithms, setting a comprehensive benchmark for other people doing football tracking”, Andrews says.
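To illustrate what a perspective transformation to a top-down view involves, here is a minimal sketch using a classical planar homography fitted from four reference points. This is not FootyVision's method, which is a learned model; it is a textbook stand-in. The function names, the image coordinates of the pitch corners, the 105 m by 68 m pitch size and the sample player position are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Matrix = List[List[float]]


def solve_linear(a: Sequence[Sequence[float]], b: Sequence[float]) -> List[float]:
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                for c in range(col, n + 1):
                    m[r][c] -= factor * m[col][c]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_homography(src: Sequence[Point], dst: Sequence[Point]) -> Matrix:
    """Estimate a 3x3 homography (bottom-right entry fixed to 1) from four point pairs."""
    if len(src) != 4 or len(dst) != 4:
        raise ValueError("exactly four point pairs are required")
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # Each pair gives two linear equations in the eight unknown entries.
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def to_pitch(h: Matrix, point: Point) -> Point:
    """Map an image pixel to top-down pitch coordinates."""
    x, y = point
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    u = (h[0][0] * x + h[0][1] * y + h[0][2]) / w
    v = (h[1][0] * x + h[1][1] * y + h[1][2]) / w
    return (u, v)


# Hypothetical pixel positions of the four pitch corners in a broadcast frame,
# paired with their positions on an assumed 105 m x 68 m pitch.
IMAGE_CORNERS = [(200.0, 100.0), (1080.0, 100.0), (1280.0, 700.0), (0.0, 700.0)]
PITCH_CORNERS = [(0.0, 0.0), (105.0, 0.0), (105.0, 68.0), (0.0, 68.0)]

H = fit_homography(IMAGE_CORNERS, PITCH_CORNERS)
# Hypothetical detected player position (pixels) mapped to pitch coordinates (metres).
print(to_pitch(H, (640.0, 400.0)))
```

A fixed homography like this only holds while the camera does not move and the reference points are visible, which is one reason a learned approach that can localise players with limited visual information is attractive.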

The researchers conducted a user study on how such systems enhance viewers' enjoyment and influence their understanding of the game. Sixteen participants engaged with both the interactive and non-interactive modes, revealing a clear preference for the interactive mode, which provided a heightened sense of immersion and satisfaction. Moreover, results showed that the interactive mode helped users understand the players on the pitch and in-game developments.

“Users reported that while the interactive mode was novel, they would like a hybrid version that allowed for both interactive and non-interactive viewing”, Andrews says.

Andrews will look at how to balance the two modes in future work. So far, users have reported that the automated, non-interactive mode gives too much information and that the voiced commentary makes it hard to concentrate on the viewing experience, whereas the interactive mode delivers information on demand, resulting in a more controlled user experience.

“Both modes assisted users in their understanding of players on the pitch and in-game developments. However, the interactive mode slightly outperformed, likely due to its on-demand nature. This interactive approach is not limited to football but can be applied to various games, particularly beneficial when unfamiliar with the teams. Its interactive nature facilitates quicker learning, potentially enticing more people to engage with content. Many participants expressed interest in using the system for future game watching”, Andrews remarked.

Future

The team's previous studies focused on information extraction and on comparing how young adults watch games. Another study emphasised user interaction and the prototype of the non-interactive commentary. In the future, Andrews aims to apply the system to an entirely different domain, politics, to help users understand political debates and make it easier for them to ask questions.

- Pete Andrews, Oda Elise Nordberg, Morten Fjeld, Dr Njål Borch, Frode Guribye, Kazuyuki Fujita, Stephanie Zubicueta Portales. AiCommentator: A Multimodal Conversational Agent for Embedded Visualization in Football Viewing. IUI ’24.

- Peter Andrews, Njaal Borch, Morten Fjeld. FootyVision: Multi-Object Tracking, Localisation, and Augmentation of Players and Ball in Football Video. ACM ICMIP.

- Peter Andrews, Oda Elise Nordberg, Njål Borch, Frode Guribye, and Morten Fjeld. 2024. Designing for Automated Sports Commentary Systems. In Proceedings of the 2024 ACM International Conference on Interactive Media Experiences (IMX ’24). Association for Computing Machinery, New York, NY, USA, 75–93.