The MediaFutures Annual Meeting 2025 gathered 140 researchers, industry partners, and technology experts to discuss new developments in artificial intelligence for the media sector. This year’s theme, “Navigating Uncertainty with AI: Battling Misinformation and Empowering Users,” highlighted both the potential and the challenges AI poses for journalism, communication, and creative industries.

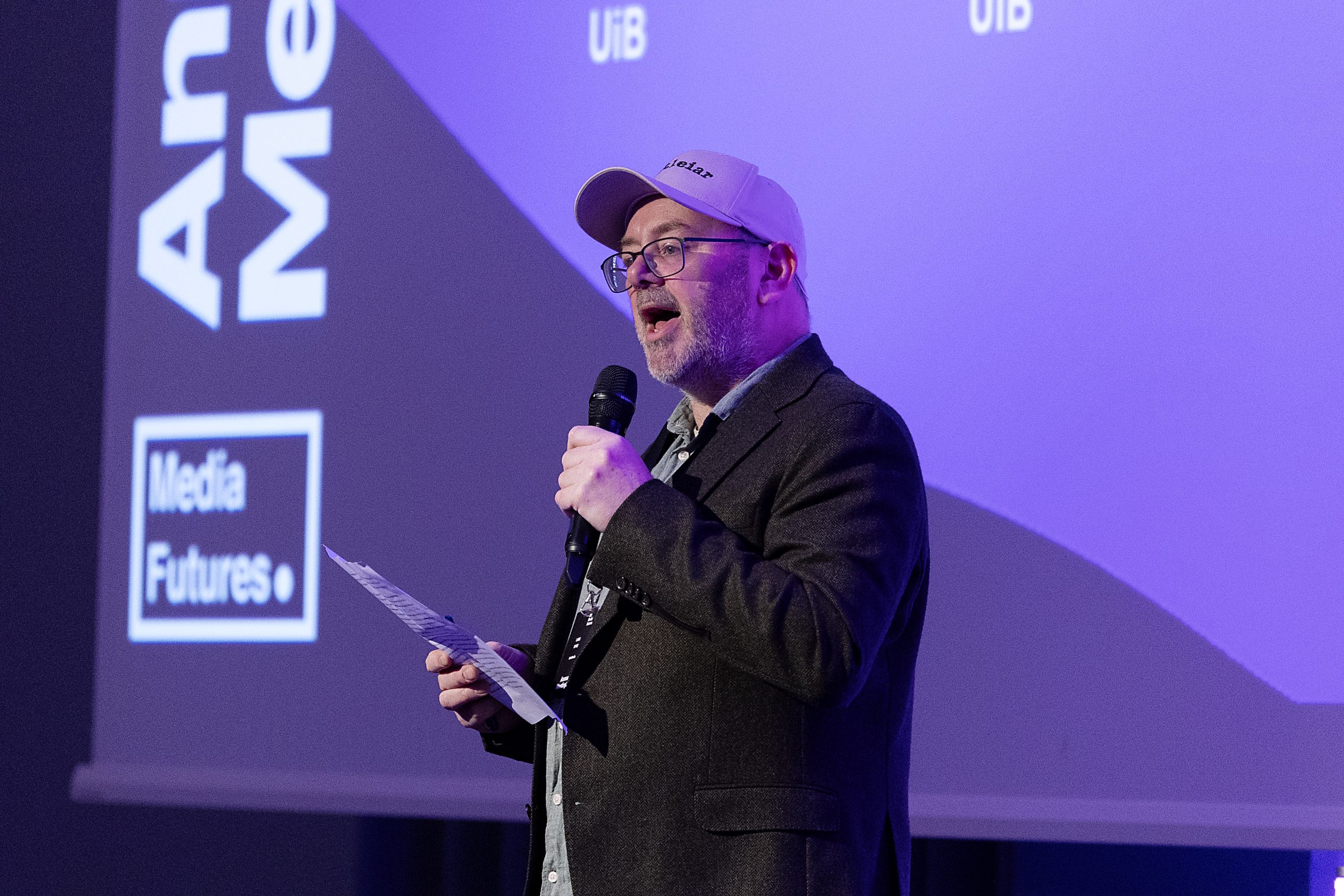

The meeting opened with remarks from Lars Nyre, Deputy Leader of the Infomedia Institute at UiB; Synnøve Kristine N. Bendixsen, Dean of the SV Faculty; Christian Birkeland, Chief Digital Officer at TV 2; and Christoph Trattner, Director of MediaFutures. All four celebrated the collaboration between academia and industry.

Expanding Capabilities and Creativity

The first keynote, by Cornelia Bjørke-Hill from Microsoft Norway, focused on using AI to support workflow and improve efficiency. She warned that human capacity has reached its limits: “Statistically, every second minute we are getting interrupted at work and have less time to focus, so we need support from AI. People often turn to AI because it’s fast, always available, and full of ideas.”

She shared practical examples from her daily work, including prompts and AI agents for tasks like social media posts and press releases. Her final advice was simple: “Whatever you use AI for, always do a final check before you use the content it creates.”

Following her, Alex Connock explored the creative possibilities AI opens up for media professionals. He urged companies to rethink old habits before disruption forces them to: “We must disrupt ourselves, because a big disruption is coming anyway.”

He emphasized that AI should be central to any media strategy: “The only value in your business is what the AI cannot do.”

Connock also highlighted emerging opportunities, from restoring old films to translating ancient scrolls, while cautioning against misuse and profiling. He predicted that by 2026, AI agents handling entire creative workflows would become mainstream.

Technology in Action

Speakers from Google, Microsoft, and Schibsted offered perspectives on AI in today’s media landscape.

Zhixian Bao from Google Norway highlighted the rise of multimodal AI: “Multimodality is a key trend in 2025. Forty percent of our time goes to finding information.”

Maxim Salnikov from Microsoft reminded the audience that humans must remain in control: “We humans control the agents. We set the direction. Success starts not with technology but with company culture.”

From a media perspective, Victorina Demirel from Schibsted noted how AI platforms are changing user habits: “People may no longer go to the original news source. We want to experiment responsibly, be transparent, and advocate for ethical clarity. I believe truth, trust, and technology can and must coexist.”

Editors speak openly about real newsroom challenges

A panel of editors from DN Media Group, TV 2, Bergens Tidende, Amedia, and Schibsted discussed how their newsrooms are using AI. Enthusiasm for the technology was high, but the conversation also surfaced practical and ethical challenges.

Chris Ronaldsen from TV 2 noted that discussions often focus more on AI benefits than difficulties, and Jan Stian Vold from BT observed that many journalists still struggle to use new tools effectively. Magnus Aabech stressed the importance of prioritizing user needs over trends, while Victorina Demirel and Erik Bonesvoll underscored responsible implementation, trust, and transparency.

Celebrating new talent

The day concluded with a showcase of emerging researchers. Sixteen posters and four demos were presented in one-minute pitches, after which both the scientific jury and the audience voted.

The winners were Yuki Onishi for her gaze-tracking project with Vizrt and TV 2, and Khadiga Seddik for her work on personalizing headline styles with LLMs.

The MediaFutures Annual Meeting 2025 demonstrated the centre’s continued commitment to developing responsible and human-centred AI solutions for the media sector. By combining academic research, technological innovation, and industry collaboration, MediaFutures aims to support a more informed, resilient, and trustworthy media ecosystem.

The centre thanks all speakers, partners, and participants for their contributions and looks forward to welcoming them again in 2026.

Centre Director Christoph Trattner:

“I am impressed by how much interest our MediaFutures Annual Meeting attracts, how professional we have become in organizing these meetings, and how many internationally recognized high-profile speakers choose to come and share their insights and knowledge on responsible AI — and do so entirely for free. Wow.”